Computing Angle Between Vectors

by James W. Walker

9 December 2014, revised 5 June 2016

The Problem with the Dot Product

In 3D computer graphics programming, it is often necessary to compute the angle between two vectors. For years, I did that using the familiar formula for angle in terms of the dot product and the inverse cosine function:

$$\theta = \mathrm{acos}\left(\frac{\mathbf{u}•\mathbf{v}}{\left\| \mathbf{u} \right\|\; \left\| \mathbf{v} \right\|}\right)$$

For machine computation, you may want to write it with only one square root:

$$\theta = \mathrm{acos}\left(\frac{\mathbf{u}•\mathbf{v}}{\sqrt{\strut(\mathbf{u}•\mathbf{u})\,(\mathbf{v}•\mathbf{v})}}\right)$$

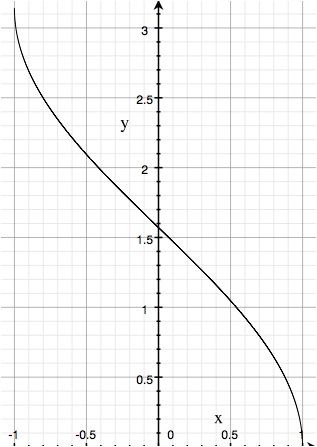

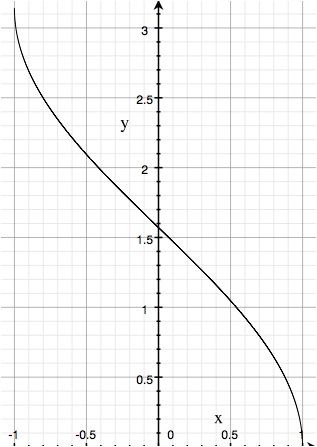

However, it has recently come to my attention (for instance via a blog post by John Blackburne) that this is not the most accurate formula, especially for vectors that are nearly parallel or opposite. Consider the graph of the inverse cosine, shown at right. Its singularities at 1 and -1 cause a problem. Let us consider the vectors \(\mathbf{u} = (1, 0, 0)\) and \(\mathbf{v} = (1, x, 0)\), and examine what happens when \(x\) is small relative to 1. In this case, the angle formula becomes:

However, it has recently come to my attention (for instance via a blog post by John Blackburne) that this is not the most accurate formula, especially for vectors that are nearly parallel or opposite. Consider the graph of the inverse cosine, shown at right. Its singularities at 1 and -1 cause a problem. Let us consider the vectors \(\mathbf{u} = (1, 0, 0)\) and \(\mathbf{v} = (1, x, 0)\), and examine what happens when \(x\) is small relative to 1. In this case, the angle formula becomes:

\[\theta = \mathrm{acos}\left(\frac{1}{\sqrt{1 + x^2}}\right) = \mathrm{acos}((1 + x^2)^{-1/2})\;.\]

Near zero, the function inside the inverse cosine

\[(1 + x^2)^{-1/2}\]

has the same second-order approximation as the cosine:

\[f(x) \;=\; 1 - \frac{1}{2}x^2\;.\]

Recall that a machine representation of floating-point numbers has a parameter \(\epsilon\) (the machine epsilon) such that the numbers \(1\) and \(1 + z\) have the same representation whenever \(|z| < \epsilon\). We could multiply by a nonzero number \(a\) and say that \(a\) and \(a(1+z)\) are indistinguishable when \(|z| < \epsilon\). If we let \(b =a(1+z)\) and solve for \(z\) in terms of \(a\) and \(b\), we can say that

\[a\mbox{ and }b\mbox{ are indistinguishable when }\left|\frac{b-a}{a}\right| < \epsilon\;.\]

Let's consider a small change in \(x\) in terms of replacing \(x\) by \(x(1 + z)\), which will be significant in the machine representation if \(|z| > \epsilon\). When does this produce a change in the machine representation of the function value? The values \(f(x)\) and \(f(x(1+z))\) will be indistinguishable when

\[\left|\frac{f(x(1+z)) - f(x)}{f(x)}\right|\; <\; \epsilon\;.\]

Let's work on that left hand side.

\[

\begin{align}

\frac{f(x(1+z)) - f(x)}{f(x)} & = \frac{1 - \frac{1}{2}(x(1+z))^2 - (1 - \frac{1}{2}x^2)}{1 - \frac{1}{2}x^2}\\

& = \frac{1 - \frac{1}{2}x^2 - \frac{1}{2}x^2(2z+z^2) - (1 - \frac{1}{2}x^2)}{1 - \frac{1}{2}x^2} \\

& = \frac{- \frac{1}{2}x^2(2z+z^2)}{1 - \frac{1}{2}x^2} \; .

\end{align}

\]

This has an absolute value less than \(\epsilon\) when

\[|2z+z^2|\; < \; \epsilon \left|\frac{1 - \frac{1}{2}x^2}{x^2}\right|\;.\]

What this tells us is that when \(x\) is small, a \(z\) value far greater than \(\epsilon\) can produce no detectable change in the output value of the function. Simply put, roundoff error is causing a loss of information, and the closer we get to zero, the worse it gets. For example, if \(x = 0.01\), \(f(x(1+z))\) will be indistinguishable from \(f(x)\) when

\[|2z+z^2|\; < \; 9999.5 \,\epsilon\;.\]

A Better Formula

Since we are trying to compute an angle, some sort of inverse trignonometric function seems inevitable. The inverse sine function has the same kind of singularities as the inverse cosine. But the inverse tangent is nice and smooth over the whole real domain, so we might seek a formula using the inverse tangent.

From the two well-known formulas

\[\mathbf{u}•\mathbf{v} = \|\mathbf{u}\| \, \|\mathbf{v}\| \cos(\theta)\; \mbox{and}\]

\[\|\mathbf{u}\times\mathbf{v}\| = \|\mathbf{u}\| \, \|\mathbf{v}\| \sin(\theta)\; \mbox{,}\]

we can deduce that

\[\tan(\theta) = \frac{\|\mathbf{u}\times\mathbf{v}\|}{\mathbf{u}•\mathbf{v}}\;.\]

We have to be a bit careful about turning that into a function involving the inverse tangent, since we are looking for an angle in the interval \([0, \pi]\) but the standard inverse tangent has the range \([-\pi/2, \pi/2]\). However, the standard C function library has a version of arc tangent that does just what we want:

\[\theta = \operatorname{atan2}\left( \|\mathbf{u}\times\mathbf{v}\|, \mathbf{u}•\mathbf{v} \right)\]

Incidentally, using atan2 also means that we need not worry about division by zero.

Even Better?

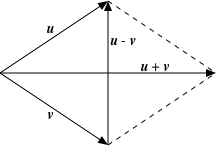

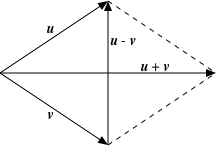

If the vectors \(\mathbf{u}\) and \(\mathbf{v}\) happen to have the same length, one can easily verify that \(\mathbf{u} + \mathbf{v}\) and \(\mathbf{u} - \mathbf{v}\) are orthogonal, and that the tangent of half the angle between \(\mathbf{u}\) and \(\mathbf{v}\) is \(\left\|\mathbf{u} - \mathbf{v}\right\| / \left\|\mathbf{u} + \mathbf{v}\right\|\). Therefore the angle between \(\mathbf{u}\) and \(\mathbf{v}\) is \(2\,\operatorname{atan2}\left(\left\|\mathbf{u} - \mathbf{v}\right\|, \left\|\mathbf{u} + \mathbf{v}\right\|\right)\). To handle the more general case, we can scale \(\mathbf{u}\) and \(\mathbf{v}\) by each other's lengths to get vectors of the same length:

If the vectors \(\mathbf{u}\) and \(\mathbf{v}\) happen to have the same length, one can easily verify that \(\mathbf{u} + \mathbf{v}\) and \(\mathbf{u} - \mathbf{v}\) are orthogonal, and that the tangent of half the angle between \(\mathbf{u}\) and \(\mathbf{v}\) is \(\left\|\mathbf{u} - \mathbf{v}\right\| / \left\|\mathbf{u} + \mathbf{v}\right\|\). Therefore the angle between \(\mathbf{u}\) and \(\mathbf{v}\) is \(2\,\operatorname{atan2}\left(\left\|\mathbf{u} - \mathbf{v}\right\|, \left\|\mathbf{u} + \mathbf{v}\right\|\right)\). To handle the more general case, we can scale \(\mathbf{u}\) and \(\mathbf{v}\) by each other's lengths to get vectors of the same length:

\[

\theta = 2\, \operatorname{atan2}\left(

\left\| \, \|\mathbf{v}\|\,\mathbf{u} - \|\mathbf{u}\|\,\mathbf{v}\, \right\|,

\left\|\, \|\mathbf{v}\|\,\mathbf{u} + \|\mathbf{u}\|\,\mathbf{v}\,\right\|

\right)

\]

I have read a hand-waving argument that this formula is better than the one involving the cross product, due to the cancellations involved in computing the cross product. I'd like to see a more convincing demonstration that this last formula is better.

Copyright ©2014, 2016 James W. Walker

However, it has recently come to my attention (for instance via a blog post by John Blackburne) that this is not the most accurate formula, especially for vectors that are nearly parallel or opposite. Consider the graph of the inverse cosine, shown at right. Its singularities at 1 and -1 cause a problem. Let us consider the vectors \(\mathbf{u} = (1, 0, 0)\) and \(\mathbf{v} = (1, x, 0)\), and examine what happens when \(x\) is small relative to 1. In this case, the angle formula becomes:

However, it has recently come to my attention (for instance via a blog post by John Blackburne) that this is not the most accurate formula, especially for vectors that are nearly parallel or opposite. Consider the graph of the inverse cosine, shown at right. Its singularities at 1 and -1 cause a problem. Let us consider the vectors \(\mathbf{u} = (1, 0, 0)\) and \(\mathbf{v} = (1, x, 0)\), and examine what happens when \(x\) is small relative to 1. In this case, the angle formula becomes: If the vectors \(\mathbf{u}\) and \(\mathbf{v}\) happen to have the same length, one can easily verify that \(\mathbf{u} + \mathbf{v}\) and \(\mathbf{u} - \mathbf{v}\) are orthogonal, and that the tangent of half the angle between \(\mathbf{u}\) and \(\mathbf{v}\) is \(\left\|\mathbf{u} - \mathbf{v}\right\| / \left\|\mathbf{u} + \mathbf{v}\right\|\). Therefore the angle between \(\mathbf{u}\) and \(\mathbf{v}\) is \(2\,\operatorname{atan2}\left(\left\|\mathbf{u} - \mathbf{v}\right\|, \left\|\mathbf{u} + \mathbf{v}\right\|\right)\). To handle the more general case, we can scale \(\mathbf{u}\) and \(\mathbf{v}\) by each other's lengths to get vectors of the same length:

If the vectors \(\mathbf{u}\) and \(\mathbf{v}\) happen to have the same length, one can easily verify that \(\mathbf{u} + \mathbf{v}\) and \(\mathbf{u} - \mathbf{v}\) are orthogonal, and that the tangent of half the angle between \(\mathbf{u}\) and \(\mathbf{v}\) is \(\left\|\mathbf{u} - \mathbf{v}\right\| / \left\|\mathbf{u} + \mathbf{v}\right\|\). Therefore the angle between \(\mathbf{u}\) and \(\mathbf{v}\) is \(2\,\operatorname{atan2}\left(\left\|\mathbf{u} - \mathbf{v}\right\|, \left\|\mathbf{u} + \mathbf{v}\right\|\right)\). To handle the more general case, we can scale \(\mathbf{u}\) and \(\mathbf{v}\) by each other's lengths to get vectors of the same length: